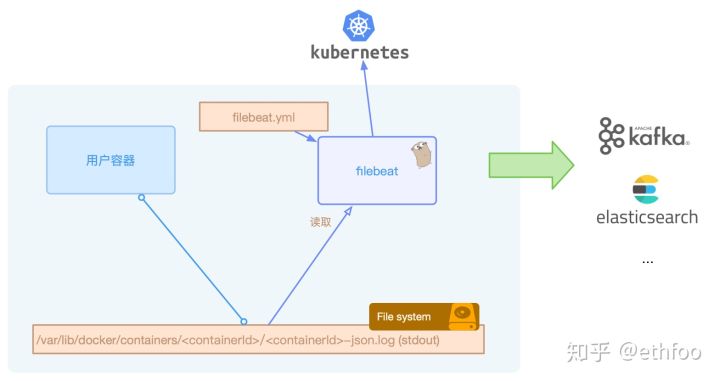

Filebeat 是使用 Golang 实现的轻量型日志采集器,也是 Elasticsearch stack 里面的一员。本质上是一个 agent,可以安装在各个节点上,根据配置读取对应位置的日志,并上报到相应的地方去。

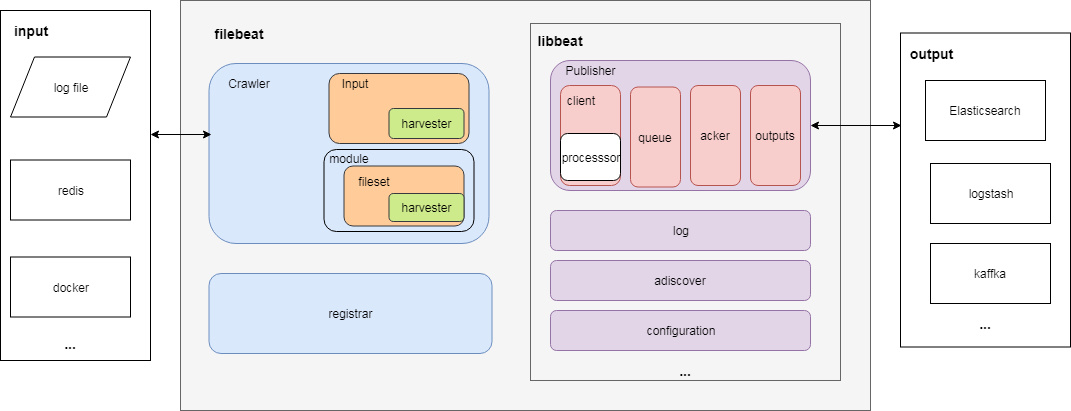

filebeat源码归属于beats项目,而beats项目的设计初衷是为了采集各类的数据,所以beats抽象出了一个libbeat库,基于libbeat我们可以快速的开发实现一个采集的工具,除了filebeat,还有像metricbeat、packetbeat等官方的项目也是在beats工程中。libbeat已经实现了内存缓存队列memqueue、几种output日志发送客户端,数据的过滤处理processor,配置解析、日志打印、事件处理和发送等通用功能,而filebeat只需要实现日志文件的读取等和日志相关的逻辑即可。

beats

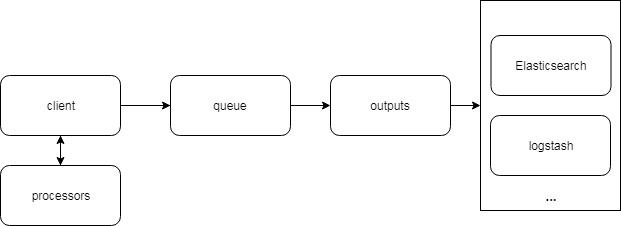

对于任一种beats来说,主要逻辑都包含两个部分:

- 收集数据并转换成事件

- 发送事件到指定的输出

其中第二点已由libbeat实现,因此各个beats实际只需要关心如何收集数据并生成事件后发送给libbeat的Publisher。

filebeat整体架构

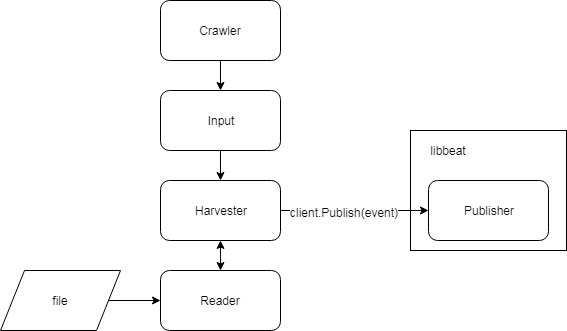

架构图

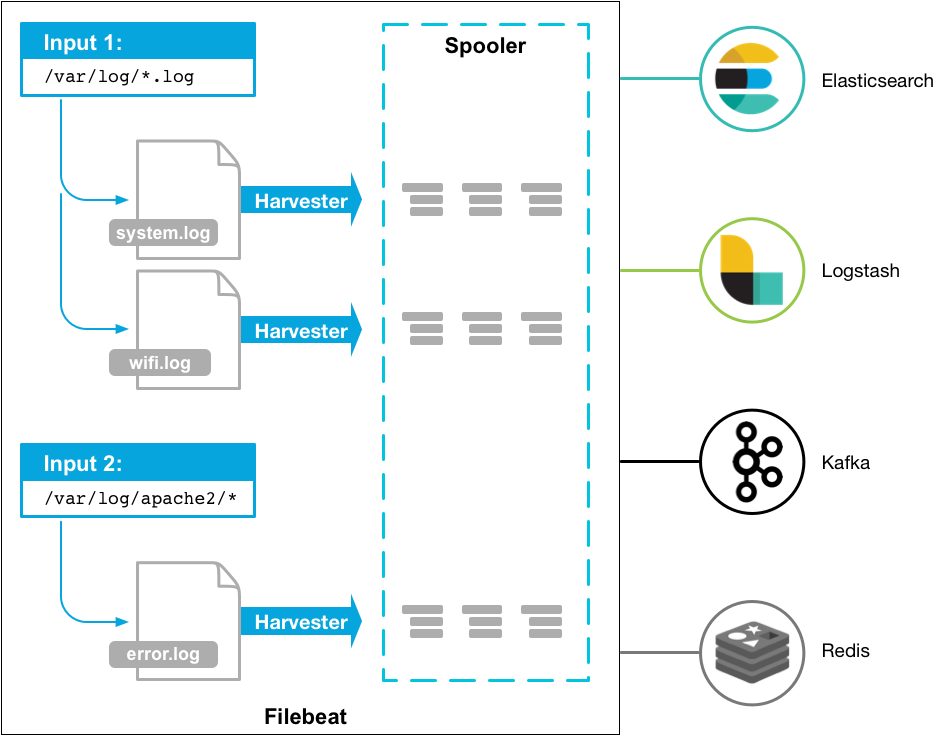

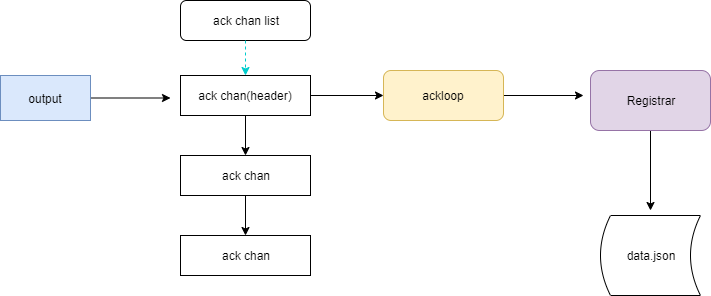

下图是 Filebeat 官方提供的架构图:

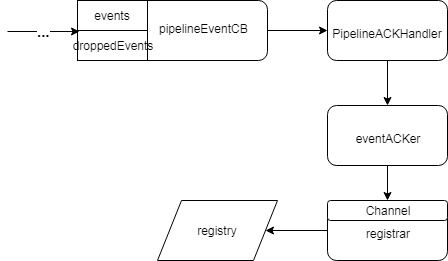

下图是看代码的一些模块组合

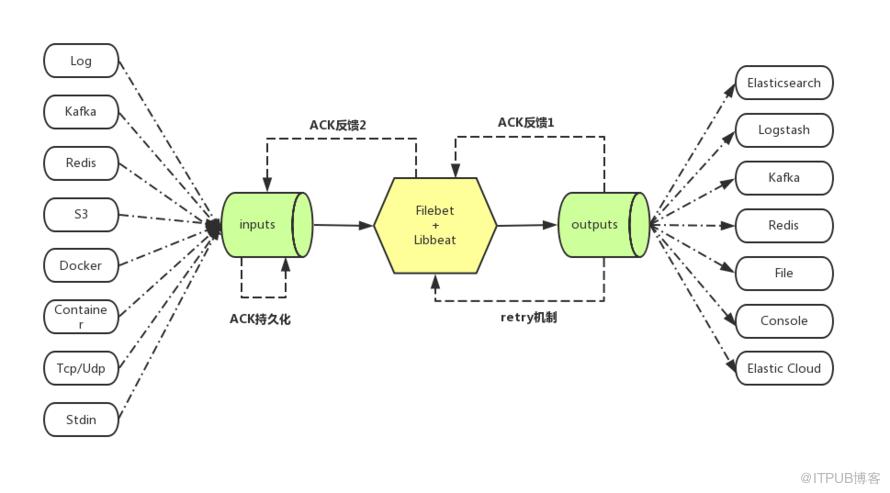

其实我个人觉得这一幅图是最形象的说明了filebeat的功能

模块

除了图中提到的各个模块,整个 filebeat 主要包含以下重要模块:

1.filebeat主要模块

Crawler: 负责管理和启动各个Input,管理所有Input收集数据并发送事件到libbeat的Publisher

Input: 负责管理和解析输入源的信息,以及为每个文件启动 Harvester。可由配置文件指定输入源信息。

Harvester: 负责读取一个文件的数据,对应一个输入源,是收集数据的实际工作者。配置中,一个具体的Input可以包含多个输入源(Harvester)

module: 简化了一些常见程序日志(比如nginx日志)收集、解析、可视化(kibana dashboard)配置项

fileset: module下具体的一种Input定义(比如nginx包括access和error log),包含:

1)输入配置;

2)es ingest node pipeline定义;

3)事件字段定义;

4)示例kibana dashboard

Registrar:接收libbeat反馈回来的ACK, 作相应的持久化,管理记录每个文件处理状态,包括偏移量、文件名等信息。当 Filebeat 启动时,会从 Registrar 恢复文件处理状态。

2.libbeat主要模块

Pipeline(publisher): 负责管理缓存、Harvester 的信息写入以及 Output 的消费等,是 Filebeat 最核心的组件。

client: 提供Publish接口让filebeat将事件发送到Publisher。在发送到队列之前,内部会先调用processors(包括input 内部的processors和全局processors)进行处理。

processor: 事件处理器,可对事件按照配置中的条件进行各种处理(比如删除事件、保留指定字段,过滤添加字段,多行合并等)。配置项

queue: 事件队列,有memqueue(基于内存)和spool(基于磁盘文件)两种实现。配置项

outputs: 事件的输出端,比如ES、Logstash、kafka等。配置项

acker: 事件确认回调,在事件发送成功后进行回调

autodiscover:用于自动发现容器并将其作为输入源

filebeat 的整个生命周期,几个组件共同协作,完成了日志从采集到上报的整个过程。

基本原理(源码解析)

文件目录组织

├── autodiscover # 包含filebeat的autodiscover适配器(adapter),当autodiscover发现新容器时创建对应类型的输入

├── beater # 包含与libbeat库交互相关的文件

├── channel # 包含filebeat输出到pipeline相关的文件

├── config # 包含filebeat配置结构和解析函数

├── crawler # 包含Crawler结构和相关函数

├── fileset # 包含module和fileset相关的结构

├── harvester # 包含Harvester接口定义、Reader接口及实现等

├── input # 包含所有输入类型的实现(比如: log, stdin, syslog)

├── inputsource # 在syslog输入类型中用于读取tcp或udp syslog

├── module # 包含各module和fileset配置

├── modules.d # 包含各module对应的日志路径配置文件,用于修改默认路径

├── processor # 用于从容器日志的事件字段source中提取容器id

├── prospector # 包含旧版本的输入结构Prospector,现已被Input取代

├── registrar # 包含Registrar结构和方法

└── util # 包含beat事件和文件状态的通用结构Data

└── ...

在这些目录中还有一些重要的文件

/beater:包含与libbeat库交互相关的文件:

acker.go: 包含在libbeat设置的ack回调函数,事件成功发送后被调用

channels.go: 包含在ack回调函数中被调用的记录者(logger),包括:

registrarLogger: 将已确认事件写入registrar运行队列

finishedLogger: 统计已确认事件数量

filebeat.go: 包含实现了beater接口的filebeat结构,接口函数包括:

New:创建了filebeat实例

Run:运行filebeat

Stop: 停止filebeat运行

signalwait.go:基于channel实现的等待函数,在filebeat中用于:

等待fileebat结束

等待确认事件被写入registry文件

/channel:filebeat输出(到pipeline)相关的文件

factory.go: 包含OutletFactory,用于创建输出器Outleter对象

interface.go: 定义输出接口Outleter

outlet.go: 实现Outleter,封装了libbeat的pipeline client,其在harvester中被调用用于将事件发送给pipeline

util.go: 定义ack回调的参数结构data,包含beat事件和文件状态

/input:包含Input接口及各种输入类型的Input和Harvester实现

Input:对应配置中的一个Input项,同个Input下可包含多个输入源(比如文件)

Harvester:每个输入源对应一个Harvester,负责实际收集数据、并发送事件到pipeline

/harvester:包含Harvester接口定义、Reader接口及实现等

forwarder.go: Forwarder结构(包含outlet)定义,用于转发事件

harvester.go: Harvester接口定义,具体实现则在/input目录下

registry.go: Registry结构,用于在Input中管理多个Harvester(输入源)的启动和停止

source.go: Source接口定义,表示输入源。目前仅有Pipe一种实现(包含os.File),用在log、stdin和docker输入类型中。btw,这三种输入类型都是用的log input的实现。

/reader目录: Reader接口定义和各种Reader实现

重要数据结构

beats通用事件结构(libbeat/beat/event.go):

type Event struct {

Timestamp time.Time // 收集日志时记录的时间戳,对应es文档中的@timestamp字段

Meta common.MapStr // meta信息,outpus可选的将其作为事件字段输出。比如输出为es且指定了pipeline时,其pipeline id就被包含在此字段中

Fields common.MapStr // 默认输出字段定义在field.yml,其他字段可以在通过fields配置项指定

Private interface{} // for beats private use

}

Crawler(filebeat/crawler/crawler.go):

// Crawler 负责抓取日志并发送到libbeat pipeline

type Crawler struct {

inputs map[uint64]*input.Runner // 包含所有输入的runner

inputConfigs []*common.Config

out channel.Factory

wg sync.WaitGroup

InputsFactory cfgfile.RunnerFactory

ModulesFactory cfgfile.RunnerFactory

modulesReloader *cfgfile.Reloader

inputReloader *cfgfile.Reloader

once bool

beatVersion string

beatDone chan struct{}

}

log类型Input(filebeat/input/log/input.go)

// Input contains the input and its config

type Input struct {

cfg *common.Config

config config

states *file.States

harvesters *harvester.Registry // 包含Input所有Harvester

outlet channel.Outleter // Input共享的Publisher client

stateOutlet channel.Outleter

done chan struct{}

numHarvesters atomic.Uint32

meta map[string]string

}

log类型Harvester(filebeat/input/log/harvester.go):

type Harvester struct {

id uuid.UUID

config config

source harvester.Source // the source being watched

// shutdown handling

done chan struct{}

stopOnce sync.Once

stopWg *sync.WaitGroup

stopLock sync.Mutex

// internal harvester state

state file.State

states *file.States

log *Log

// file reader pipeline

reader reader.Reader

encodingFactory encoding.EncodingFactory

encoding encoding.Encoding

// event/state publishing

outletFactory OutletFactory

publishState func(*util.Data) bool

onTerminate func()

}

Registrar(filebeat/registrar/registrar.go):

type Registrar struct {

Channel chan []file.State

out successLogger

done chan struct{}

registryFile string // Path to the Registry File

fileMode os.FileMode // Permissions to apply on the Registry File

wg sync.WaitGroup

states *file.States // Map with all file paths inside and the corresponding state

gcRequired bool // gcRequired is set if registry state needs to be gc'ed before the next write

gcEnabled bool // gcEnabled indictes the registry contains some state that can be gc'ed in the future

flushTimeout time.Duration

bufferedStateUpdates int

}

libbeat Pipeline(libbeat/publisher/pipeline/pipeline.go)

type Pipeline struct {

beatInfo beat.Info

logger *logp.Logger

queue queue.Queue

output *outputController

observer observer

eventer pipelineEventer

// wait close support

waitCloseMode WaitCloseMode

waitCloseTimeout time.Duration

waitCloser *waitCloser

// pipeline ack

ackMode pipelineACKMode

ackActive atomic.Bool

ackDone chan struct{}

ackBuilder ackBuilder // pipelineEventsACK

eventSema *sema

processors pipelineProcessors

}

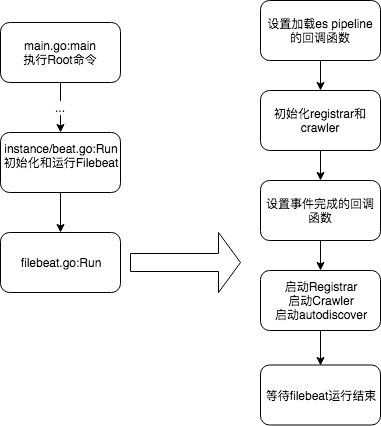

启动

filebeat启动流程图

每个 beat 的构建是独立的。从 filebeat 的入口文件filebeat/main.go可以看到,它向libbeat传递了名字、版本和构造函数来构造自身。跟着走到libbeat/beater/beater.go,我们可以看到程序的启动时的主要工作都是在这里完成的,包括命令行参数的处理、通用配置项的解析,以及最为重要的:调用象征一个beat的生命周期的若干方法

我们来看filebeat的启动过程。

1、执行root命令

在filebeat/main.go文件中,main函数调用了cmd.RootCmd.Execute(),而RootCmd则是在cmd/root.go中被init函数初始化,其中就注册了filebeat.go:New函数以创建实现了beater接口的filebeat实例

对于任意一个beats来说,都需要有:

- 实现Beater接口的具体Beater(如Filebeat);

- 创建该具体Beater的(New)函数。

beater接口定义(beat/beat.go):

type Beater interface {

// The main event loop. This method should block until signalled to stop by an

// invocation of the Stop() method.

Run(b *Beat) error

// Stop is invoked to signal that the Run method should finish its execution.

// It will be invoked at most once.

Stop()

}

2、初始化和运行Filebeat

- 创建libbeat/cmd/instance/beat.go:Beat结构

- 执行(*Beat).launch方法

- (*Beat).Init() 初始化Beat:加载beats公共config

- (*Beat).createBeater

- registerTemplateLoading: 当输出为es时,注册加载es模板的回调函数

- pipeline.Load: 创建Pipeline:包含队列、事件处理器、输出等

- setupMetrics: 安装监控

- filebeat.New: 解析配置(其中输入配置包括配置文件中的Input和module Input)等

- loadDashboards 加载kibana dashboard

- (*Filebeat).Run: 运行filebeat

3、Filebeat运行

- 设置加载es pipeline的回调函数

- 初始化registrar和crawler

- 设置事件完成的回调函数

- 启动Registrar、启动Crawler、启动Autodiscover

- 等待filebeat运行结束

我们再重代码看一下这个启动过程

main.go

package main

import (

"os"

"github.com/elastic/beats/filebeat/cmd"

)

func main() {

if err := cmd.RootCmd.Execute(); err != nil {

os.Exit(1)

}

}

进入到filebeat/cmd执行

package cmd

import (

"flag"

"github.com/spf13/pflag"

"github.com/elastic/beats/filebeat/beater"

cmd "github.com/elastic/beats/libbeat/cmd"

)

// Name of this beat

var Name = "filebeat"

// RootCmd to handle beats cli

var RootCmd *cmd.BeatsRootCmd

func init() {

var runFlags = pflag.NewFlagSet(Name, pflag.ExitOnError)

runFlags.AddGoFlag(flag.CommandLine.Lookup("once"))

runFlags.AddGoFlag(flag.CommandLine.Lookup("modules"))

RootCmd = cmd.GenRootCmdWithRunFlags(Name, "", beater.New, runFlags)

RootCmd.PersistentFlags().AddGoFlag(flag.CommandLine.Lookup("M"))

RootCmd.TestCmd.Flags().AddGoFlag(flag.CommandLine.Lookup("modules"))

RootCmd.SetupCmd.Flags().AddGoFlag(flag.CommandLine.Lookup("modules"))

RootCmd.AddCommand(cmd.GenModulesCmd(Name, "", buildModulesManager))

}

RootCmd 在这一句初始化

RootCmd = cmd.GenRootCmdWithRunFlags(Name, "", beater.New, runFlags)

beater.New跟进去看到是filebeat.go,这个函数会在后面进行调用,来创建filebeat结构体,传递filebeat相关的配置。

func New(b beat.Beat, rawConfig common.Config) (beat.Beater, error) {...}

现在进入GenRootCmdWithRunFlags方法,一路跟进去到GenRootCmdWithSettings,真正的初始化是在这个方法里面。

忽略前面的一段初始化值方法,看到RunCmd的初始化在:

rootCmd.RunCmd = genRunCmd(settings, beatCreator, runFlags)

进入getRunCmd,看到执行代码

err := instance.Run(settings, beatCreator)

跟到\elastic\beats\libbeat\cmd\instance\beat.go的Run方法

b, err := NewBeat(name, idxPrefix, version)

这里新建了beat结构体,同时将filebeat的New方法也传递了进来,就是参数beatCreator,我们可以看到在beat通过launch函数创建了filebeat结构体类型的beater

return b.launch(settings, bt)--->beater, err := b.createBeater(bt)--->beater, err := bt(&b.Beat, sub)

进入launch后,还做了很多的事情

1、还初始化了配置

err := b.InitWithSettings(settings)

pipeline, err := pipeline.Load(b.Info,

pipeline.Monitors{

Metrics: reg,

Telemetry: monitoring.GetNamespace("state").GetRegistry(),

Logger: logp.L().Named("publisher"),

},

b.Config.Pipeline,

b.processing,

b.makeOutputFactory(b.Config.Output),

)

在launch的末尾,还调用了beater启动方法,也就是filebeat的run函数

return beater.Run(&b.Beat)

因为启动的是filebeat,我们到filebeat.go的Run方法

func (fb *Filebeat) Run(b *beat.Beat) error {

var err error

config := fb.config

if !fb.moduleRegistry.Empty() {

err = fb.loadModulesPipelines(b)

if err != nil {

return err

}

}

waitFinished := newSignalWait()

waitEvents := newSignalWait()

// count active events for waiting on shutdown

wgEvents := &eventCounter{

count: monitoring.NewInt(nil, "filebeat.events.active"),

added: monitoring.NewUint(nil, "filebeat.events.added"),

done: monitoring.NewUint(nil, "filebeat.events.done"),

}

finishedLogger := newFinishedLogger(wgEvents)

// Setup registrar to persist state

registrar, err := registrar.New(config.RegistryFile, config.RegistryFilePermissions, config.RegistryFlush, finishedLogger)

if err != nil {

logp.Err("Could not init registrar: %v", err)

return err

}

// Make sure all events that were published in

registrarChannel := newRegistrarLogger(registrar)

err = b.Publisher.SetACKHandler(beat.PipelineACKHandler{

ACKEvents: newEventACKer(finishedLogger, registrarChannel).ackEvents,

})

if err != nil {

logp.Err("Failed to install the registry with the publisher pipeline: %v", err)

return err

}

outDone := make(chan struct{}) // outDone closes down all active pipeline connections

crawler, err := crawler.New(

channel.NewOutletFactory(outDone, wgEvents).Create,

config.Inputs,

b.Info.Version,

fb.done,

*once)

if err != nil {

logp.Err("Could not init crawler: %v", err)

return err

}

// The order of starting and stopping is important. Stopping is inverted to the starting order.

// The current order is: registrar, publisher, spooler, crawler

// That means, crawler is stopped first.

// Start the registrar

err = registrar.Start()

if err != nil {

return fmt.Errorf("Could not start registrar: %v", err)

}

// Stopping registrar will write last state

defer registrar.Stop()

// Stopping publisher (might potentially drop items)

defer func() {

// Closes first the registrar logger to make sure not more events arrive at the registrar

// registrarChannel must be closed first to potentially unblock (pretty unlikely) the publisher

registrarChannel.Close()

close(outDone) // finally close all active connections to publisher pipeline

}()

// Wait for all events to be processed or timeout

defer waitEvents.Wait()

// Create a ES connection factory for dynamic modules pipeline loading

var pipelineLoaderFactory fileset.PipelineLoaderFactory

if b.Config.Output.Name() == "elasticsearch" {

pipelineLoaderFactory = newPipelineLoaderFactory(b.Config.Output.Config())

} else {

logp.Warn(pipelinesWarning)

}

if config.OverwritePipelines {

logp.Debug("modules", "Existing Ingest pipelines will be updated")

}

err = crawler.Start(b.Publisher, registrar, config.ConfigInput, config.ConfigModules, pipelineLoaderFactory, config.OverwritePipelines)

if err != nil {

crawler.Stop()

return err

}

// If run once, add crawler completion check as alternative to done signal

if *once {

runOnce := func() {

logp.Info("Running filebeat once. Waiting for completion ...")

crawler.WaitForCompletion()

logp.Info("All data collection completed. Shutting down.")

}

waitFinished.Add(runOnce)

}

// Register reloadable list of inputs and modules

inputs := cfgfile.NewRunnerList(management.DebugK, crawler.InputsFactory, b.Publisher)

reload.Register.MustRegisterList("filebeat.inputs", inputs)

modules := cfgfile.NewRunnerList(management.DebugK, crawler.ModulesFactory, b.Publisher)

reload.Register.MustRegisterList("filebeat.modules", modules)

var adiscover *autodiscover.Autodiscover

if fb.config.Autodiscover != nil {

adapter := fbautodiscover.NewAutodiscoverAdapter(crawler.InputsFactory, crawler.ModulesFactory)

adiscover, err = autodiscover.NewAutodiscover("filebeat", b.Publisher, adapter, config.Autodiscover)

if err != nil {

return err

}

}

adiscover.Start()

// Add done channel to wait for shutdown signal

waitFinished.AddChan(fb.done)

waitFinished.Wait()

// Stop reloadable lists, autodiscover -> Stop crawler -> stop inputs -> stop harvesters

// Note: waiting for crawlers to stop here in order to install wgEvents.Wait

// after all events have been enqueued for publishing. Otherwise wgEvents.Wait

// or publisher might panic due to concurrent updates.

inputs.Stop()

modules.Stop()

adiscover.Stop()

crawler.Stop()

timeout := fb.config.ShutdownTimeout

// Checks if on shutdown it should wait for all events to be published

waitPublished := fb.config.ShutdownTimeout > 0 || *once

if waitPublished {

// Wait for registrar to finish writing registry

waitEvents.Add(withLog(wgEvents.Wait,

"Continue shutdown: All enqueued events being published."))

// Wait for either timeout or all events having been ACKed by outputs.

if fb.config.ShutdownTimeout > 0 {

logp.Info("Shutdown output timer started. Waiting for max %v.", timeout)

waitEvents.Add(withLog(waitDuration(timeout),

"Continue shutdown: Time out waiting for events being published."))

} else {

waitEvents.AddChan(fb.done)

}

}

return nil

}

构造了registrar和crawler,用于监控文件状态变更和数据采集。然后

err = crawler.Start(b.Publisher, registrar, config.ConfigInput, config.ConfigModules, pipelineLoaderFactory, config.OverwritePipelines)

crawler开始启动采集数据

for _, inputConfig := range c.inputConfigs {

err := c.startInput(pipeline, inputConfig, r.GetStates())

if err != nil {

return err

}

}

crawler的Start方法里面根据每个配置的输入调用一次startInput

func (c *Crawler) startInput(

pipeline beat.Pipeline,

config *common.Config,

states []file.State,

) error {

if !config.Enabled() {

return nil

}

connector := channel.ConnectTo(pipeline, c.out)

p, err := input.New(config, connector, c.beatDone, states, nil)

if err != nil {

return fmt.Errorf("Error in initing input: %s", err)

}

p.Once = c.once

if _, ok := c.inputs[p.ID]; ok {

return fmt.Errorf("Input with same ID already exists: %d", p.ID)

}

c.inputs[p.ID] = p

p.Start()

return nil

}

根据配置的input,构造log/input

func (p *Input) Run() {

logp.Debug("input", "Start next scan")

// TailFiles is like ignore_older = 1ns and only on startup

if p.config.TailFiles {

ignoreOlder := p.config.IgnoreOlder

// Overwrite ignore_older for the first scan

p.config.IgnoreOlder = 1

defer func() {

// Reset ignore_older after first run

p.config.IgnoreOlder = ignoreOlder

// Disable tail_files after the first run

p.config.TailFiles = false

}()

}

p.scan()

// It is important that a first scan is run before cleanup to make sure all new states are read first

if p.config.CleanInactive > 0 || p.config.CleanRemoved {

beforeCount := p.states.Count()

cleanedStates, pendingClean := p.states.Cleanup()

logp.Debug("input", "input states cleaned up. Before: %d, After: %d, Pending: %d",

beforeCount, beforeCount-cleanedStates, pendingClean)

}

// Marking removed files to be cleaned up. Cleanup happens after next scan to make sure all states are updated first

if p.config.CleanRemoved {

for _, state := range p.states.GetStates() {

// os.Stat will return an error in case the file does not exist

stat, err := os.Stat(state.Source)

if err != nil {

if os.IsNotExist(err) {

p.removeState(state)

logp.Debug("input", "Remove state for file as file removed: %s", state.Source)

} else {

logp.Err("input state for %s was not removed: %s", state.Source, err)

}

} else {

// Check if existing source on disk and state are the same. Remove if not the case.

newState := file.NewState(stat, state.Source, p.config.Type, p.meta)

if !newState.FileStateOS.IsSame(state.FileStateOS) {

p.removeState(state)

logp.Debug("input", "Remove state for file as file removed or renamed: %s", state.Source)

}

}

}

}

}

input开始根据配置的输入路径扫描所有符合的文件,并启动harvester

func (p *Input) scan() {

var sortInfos []FileSortInfo

var files []string

paths := p.getFiles()

var err error

if p.config.ScanSort != "" {

sortInfos, err = getSortedFiles(p.config.ScanOrder, p.config.ScanSort, getSortInfos(paths))

if err != nil {

logp.Err("Failed to sort files during scan due to error %s", err)

}

}

if sortInfos == nil {

files = getKeys(paths)

}

for i := 0; i < len(paths); i++ {

var path string

var info os.FileInfo

if sortInfos == nil {

path = files[i]

info = paths[path]

} else {

path = sortInfos[i].path

info = sortInfos[i].info

}

select {

case <-p.done:

logp.Info("Scan aborted because input stopped.")

return

default:

}

newState, err := getFileState(path, info, p)

if err != nil {

logp.Err("Skipping file %s due to error %s", path, err)

}

// Load last state

lastState := p.states.FindPrevious(newState)

// Ignores all files which fall under ignore_older

if p.isIgnoreOlder(newState) {

err := p.handleIgnoreOlder(lastState, newState)

if err != nil {

logp.Err("Updating ignore_older state error: %s", err)

}

continue

}

// Decides if previous state exists

if lastState.IsEmpty() {

logp.Debug("input", "Start harvester for new file: %s", newState.Source)

err := p.startHarvester(newState, 0)

if err == errHarvesterLimit {

logp.Debug("input", harvesterErrMsg, newState.Source, err)

continue

}

if err != nil {

logp.Err(harvesterErrMsg, newState.Source, err)

}

} else {

p.harvestExistingFile(newState, lastState)

}

}

}

在harvest的Run看到一个死循环读取message,预处理之后交由forwarder发送到目标输出

message, err := h.reader.Next()

h.sendEvent(data, forwarder)

至此,整个filebeat的启动到发送数据就理完了

配置文件解析

在libbeat中实现了通用的配置文件解析,在启动的过程中,在每次createbeater时候就会进行config。

调用 cfgfile.Load方法解析到cfg对象,进入load方法

func Load(path string, beatOverrides *common.Config) (*common.Config, error) {

var config *common.Config

var err error

cfgpath := GetPathConfig()

if path == "" {

list := []string{}

for _, cfg := range configfiles.List() {

if !filepath.IsAbs(cfg) {

list = append(list, filepath.Join(cfgpath, cfg))

} else {

list = append(list, cfg)

}

}

config, err = common.LoadFiles(list...)

} else {

if !filepath.IsAbs(path) {

path = filepath.Join(cfgpath, path)

}

config, err = common.LoadFile(path)

}

if err != nil {

return nil, err

}

if beatOverrides != nil {

config, err = common.MergeConfigs(

defaults,

beatOverrides,

config,

overwrites,

)

if err != nil {

return nil, err

}

} else {

config, err = common.MergeConfigs(

defaults,

config,

overwrites,

)

}

config.PrintDebugf("Complete configuration loaded:")

return config, nil

}

如果不输入配置文件,使用configfiles定义文件

configfiles = common.StringArrFlag(nil, "c", "beat.yml", "Configuration file, relative to path.config")

如果输入配置文件进入else分支

config, err = common.LoadFile(path)

根据配置文件构造config对象,使用的是yaml解析库。

c, err := yaml.NewConfigWithFile(path, configOpts...)

pipeline初始化

pipeline的初始化是在libbeat的创建对于的filebeat 的结构体的时候进行的在func (b *Beat) createBeater(bt beat.Creator) (beat.Beater, error) {}函数中

pipeline, err := pipeline.Load(b.Info,

pipeline.Monitors{

Metrics: reg,

Telemetry: monitoring.GetNamespace("state").GetRegistry(),

Logger: logp.L().Named("publisher"),

},

b.Config.Pipeline,

b.processing,

b.makeOutputFactory(b.Config.Output),

)

我们来看看load函数

// Load uses a Config object to create a new complete Pipeline instance with

// configured queue and outputs.

func Load(

beatInfo beat.Info,

monitors Monitors,

config Config,

processors processing.Supporter,

makeOutput func(outputs.Observer) (string, outputs.Group, error),

) (*Pipeline, error) {

log := monitors.Logger

if log == nil {

log = logp.L()

}

if publishDisabled {

log.Info("Dry run mode. All output types except the file based one are disabled.")

}

name := beatInfo.Name

settings := Settings{

WaitClose: 0,

WaitCloseMode: NoWaitOnClose,

Processors: processors,

}

queueBuilder, err := createQueueBuilder(config.Queue, monitors)

if err != nil {

return nil, err

}

out, err := loadOutput(monitors, makeOutput)

if err != nil {

return nil, err

}

p, err := New(beatInfo, monitors, queueBuilder, out, settings)

if err != nil {

return nil, err

}

log.Infof("Beat name: %s", name)

return p, err

}

主要是初始化queue,output,并创建对应的pipeline。

1、queue

queueBuilder, err := createQueueBuilder(config.Queue, monitors)

if err != nil {

return nil, err

}

进入createQueueBuilder

func createQueueBuilder(

config common.ConfigNamespace,

monitors Monitors,

) (func(queue.Eventer) (queue.Queue, error), error) {

queueType := defaultQueueType

if b := config.Name(); b != "" {

queueType = b

}

queueFactory := queue.FindFactory(queueType)

if queueFactory == nil {

return nil, fmt.Errorf("'%v' is no valid queue type", queueType)

}

queueConfig := config.Config()

if queueConfig == nil {

queueConfig = common.NewConfig()

}

if monitors.Telemetry != nil {

queueReg := monitors.Telemetry.NewRegistry("queue")

monitoring.NewString(queueReg, "name").Set(queueType)

}

return func(eventer queue.Eventer) (queue.Queue, error) {

return queueFactory(eventer, monitors.Logger, queueConfig)

}, nil

}

根据queueType(有默认类型mem)找到创建的方法,一般mem就是

func init() {

queue.RegisterType("mem", create)

}

看一下create函数

func create(eventer queue.Eventer, logger *logp.Logger, cfg *common.Config) (queue.Queue, error) {

config := defaultConfig

if err := cfg.Unpack(&config); err != nil {

return nil, err

}

if logger == nil {

logger = logp.L()

}

return NewBroker(logger, Settings{

Eventer: eventer,

Events: config.Events,

FlushMinEvents: config.FlushMinEvents,

FlushTimeout: config.FlushTimeout,

}), nil

}

创建了一个broker

// NewBroker creates a new broker based in-memory queue holding up to sz number of events.

// If waitOnClose is set to true, the broker will block on Close, until all internal

// workers handling incoming messages and ACKs have been shut down.

func NewBroker(

logger logger,

settings Settings,

) *Broker {

// define internal channel size for producer/client requests

// to the broker

chanSize := 20

var (

sz = settings.Events

minEvents = settings.FlushMinEvents

flushTimeout = settings.FlushTimeout

)

if minEvents < 1 {

minEvents = 1

}

if minEvents > 1 && flushTimeout <= 0 {

minEvents = 1

flushTimeout = 0

}

if minEvents > sz {

minEvents = sz

}

if logger == nil {

logger = logp.NewLogger("memqueue")

}

b := &Broker{

done: make(chan struct{}),

logger: logger,

// broker API channels

events: make(chan pushRequest, chanSize),

requests: make(chan getRequest),

pubCancel: make(chan producerCancelRequest, 5),

// internal broker and ACK handler channels

acks: make(chan int),

scheduledACKs: make(chan chanList),

waitOnClose: settings.WaitOnClose,

eventer: settings.Eventer,

}

var eventLoop interface {

run()

processACK(chanList, int)

}

if minEvents > 1 {

eventLoop = newBufferingEventLoop(b, sz, minEvents, flushTimeout)

} else {

eventLoop = newDirectEventLoop(b, sz)

}

b.bufSize = sz

ack := newACKLoop(b, eventLoop.processACK)

b.wg.Add(2)

go func() {

defer b.wg.Done()

eventLoop.run()

}()

go func() {

defer b.wg.Done()

ack.run()

}()

return b

}

broker就是我们的queue,同时创建了一个eventLoop(根据是否有缓存创建不同的结构体,根据配置min_event是否大于1创建BufferingEventLoop或者DirectEventLoop,一般默认都是BufferingEventLoop,即带缓冲的队列。)和ack,调用他们的run函数进行监听

这边特别说明一下eventLoop的new,我们看带缓存的newBufferingEventLoop

func newBufferingEventLoop(b *Broker, size int, minEvents int, flushTimeout time.Duration) *bufferingEventLoop {

l := &bufferingEventLoop{

broker: b,

maxEvents: size,

minEvents: minEvents,

flushTimeout: flushTimeout,

events: b.events,

get: nil,

pubCancel: b.pubCancel,

acks: b.acks,

}

l.buf = newBatchBuffer(l.minEvents)

l.timer = time.NewTimer(flushTimeout)

if !l.timer.Stop() {

<-l.timer.C

}

return l

}

把broker的值很多都赋给了bufferingEventLoop,不知道为什么这么做。

go func() {

defer b.wg.Done()

eventLoop.run()

}()

go func() {

defer b.wg.Done()

ack.run()

}()

我们看一下有缓存的事件处理

func (l *bufferingEventLoop) run() {

var (

broker = l.broker

)

for {

select {

case <-broker.done:

return

case req := <-l.events: // producer pushing new event

l.handleInsert(&req)

case req := <-l.pubCancel: // producer cancelling active events

l.handleCancel(&req)

case req := <-l.get: // consumer asking for next batch

l.handleConsumer(&req)

case l.schedACKS <- l.pendingACKs:

l.schedACKS = nil

l.pendingACKs = chanList{}

case count := <-l.acks:

l.handleACK(count)

case <-l.idleC:

l.idleC = nil

l.timer.Stop()

if l.buf.length() > 0 {

l.flushBuffer()

}

}

}

}

到这里就可以监听队列中的事件了,BufferingEventLoop是一个实现了Broker、带有各种channel的结构,主要用于将日志发送至consumer消费。 BufferingEventLoop的run方法中,同样是一个无限循环,这里可以认为是一个日志事件的调度中心。

再来看看ack的调度

func (l *ackLoop) run() {

var (

// log = l.broker.logger

// Buffer up acked event counter in acked. If acked > 0, acks will be set to

// the broker.acks channel for sending the ACKs while potentially receiving

// new batches from the broker event loop.

// This concurrent bidirectionally communication pattern requiring 'select'

// ensures we can not have any deadlock between the event loop and the ack

// loop, as the ack loop will not block on any channel

acked int

acks chan int

)

for {

select {

case <-l.broker.done:

// TODO: handle pending ACKs?

// TODO: panic on pending batches?

return

case acks <- acked:

acks, acked = nil, 0

case lst := <-l.broker.scheduledACKs:

count, events := lst.count()

l.lst.concat(&lst)

// log.Debug("ACK List:")

// for current := l.lst.head; current != nil; current = current.next {

// log.Debugf(" ack entry(seq=%v, start=%v, count=%v",

// current.seq, current.start, current.count)

// }

l.batchesSched += uint64(count)

l.totalSched += uint64(events)

case <-l.sig:

acked += l.handleBatchSig()

if acked > 0 {

acks = l.broker.acks

}

}

// log.Debug("ackloop INFO")

// log.Debug("ackloop: total events scheduled = ", l.totalSched)

// log.Debug("ackloop: total events ack = ", l.totalACK)

// log.Debug("ackloop: total batches scheduled = ", l.batchesSched)

// log.Debug("ackloop: total batches ack = ", l.batchesACKed)

l.sig = l.lst.channel()

// if l.sig == nil {

// log.Debug("ackloop: no ack scheduled")

// } else {

// log.Debug("ackloop: schedule ack: ", l.lst.head.seq)

// }

}

}

如果有处理信号就发送给regestry进行记录,关于registry在下面详细说明。

2、output

out, err := loadOutput(monitors, makeOutput)

if err != nil {

return nil, err

}

进入loadOutput

func loadOutput(

monitors Monitors,

makeOutput OutputFactory,

) (outputs.Group, error) {

log := monitors.Logger

if log == nil {

log = logp.L()

}

if publishDisabled {

return outputs.Group{}, nil

}

if makeOutput == nil {

return outputs.Group{}, nil

}

var (

metrics *monitoring.Registry

outStats outputs.Observer

)

if monitors.Metrics != nil {

metrics = monitors.Metrics.GetRegistry("output")

if metrics != nil {

metrics.Clear()

} else {

metrics = monitors.Metrics.NewRegistry("output")

}

outStats = outputs.NewStats(metrics)

}

outName, out, err := makeOutput(outStats)

if err != nil {

return outputs.Fail(err)

}

if metrics != nil {

monitoring.NewString(metrics, "type").Set(outName)

}

if monitors.Telemetry != nil {

telemetry := monitors.Telemetry.GetRegistry("output")

if telemetry != nil {

telemetry.Clear()

} else {

telemetry = monitors.Telemetry.NewRegistry("output")

}

monitoring.NewString(telemetry, "name").Set(outName)

}

return out, nil

}

这边是根据load传进来的makeOutput函数来进行创建的,我们看一下load这个参数。

func (b *Beat) makeOutputFactory(

cfg common.ConfigNamespace,

) func(outputs.Observer) (string, outputs.Group, error) {

return func(outStats outputs.Observer) (string, outputs.Group, error) {

out, err := b.createOutput(outStats, cfg)

return cfg.Name(), out, err

}

}

创建createOutput

func (b *Beat) createOutput(stats outputs.Observer, cfg common.ConfigNamespace) (outputs.Group, error) {

if !cfg.IsSet() {

return outputs.Group{}, nil

}

return outputs.Load(b.IdxSupporter, b.Info, stats, cfg.Name(), cfg.Config())

}

再看load

// Load creates and configures a output Group using a configuration object..

func Load(

im IndexManager,

info beat.Info,

stats Observer,

name string,

config *common.Config,

) (Group, error) {

factory := FindFactory(name)

if factory == nil {

return Group{}, fmt.Errorf("output type %v undefined", name)

}

if stats == nil {

stats = NewNilObserver()

}

return factory(im, info, stats, config)

}

可见根据配置文件的配置的output的类型进行创建,比如我们用kafka做为output,我们看一下创建函数

func init() {

sarama.Logger = kafkaLogger{}

outputs.RegisterType("kafka", makeKafka)

}

就是makeKafka

func makeKafka(

_ outputs.IndexManager,

beat beat.Info,

observer outputs.Observer,

cfg *common.Config,

) (outputs.Group, error) {

debugf("initialize kafka output")

config, err := readConfig(cfg)

if err != nil {

return outputs.Fail(err)

}

topic, err := outil.BuildSelectorFromConfig(cfg, outil.Settings{

Key: "topic",

MultiKey: "topics",

EnableSingleOnly: true,

FailEmpty: true,

})

if err != nil {

return outputs.Fail(err)

}

libCfg, err := newSaramaConfig(config)

if err != nil {

return outputs.Fail(err)

}

hosts, err := outputs.ReadHostList(cfg)

if err != nil {

return outputs.Fail(err)

}

codec, err := codec.CreateEncoder(beat, config.Codec)

if err != nil {

return outputs.Fail(err)

}

client, err := newKafkaClient(observer, hosts, beat.IndexPrefix, config.Key, topic, codec, libCfg)

if err != nil {

return outputs.Fail(err)

}

retry := 0

if config.MaxRetries < 0 {

retry = -1

}

return outputs.Success(config.BulkMaxSize, retry, client)

}

直接创建了kafka的client给发送的时候使用。

最后利用上面的两个构建函数来创建我们的pipeline

p, err := New(beatInfo, monitors, queueBuilder, out, settings)

if err != nil {

return nil, err

}

其实上面函数有的调用是在这个new中进行的

// New create a new Pipeline instance from a queue instance and a set of outputs.

// The new pipeline will take ownership of queue and outputs. On Close, the

// queue and outputs will be closed.

func New(

beat beat.Info,

monitors Monitors,

queueFactory queueFactory,

out outputs.Group,

settings Settings,

) (*Pipeline, error) {

var err error

if monitors.Logger == nil {

monitors.Logger = logp.NewLogger("publish")

}

p := &Pipeline{

beatInfo: beat,

monitors: monitors,

observer: nilObserver,

waitCloseMode: settings.WaitCloseMode,

waitCloseTimeout: settings.WaitClose,

processors: settings.Processors,

}

p.ackBuilder = &pipelineEmptyACK{p}

p.ackActive = atomic.MakeBool(true)

if monitors.Metrics != nil {

p.observer = newMetricsObserver(monitors.Metrics)

}

p.eventer.observer = p.observer

p.eventer.modifyable = true

if settings.WaitCloseMode == WaitOnPipelineClose && settings.WaitClose > 0 {

p.waitCloser = &waitCloser{}

// waitCloser decrements counter on queue ACK (not per client)

p.eventer.waitClose = p.waitCloser

}

p.queue, err = queueFactory(&p.eventer)

if err != nil {

return nil, err

}

if count := p.queue.BufferConfig().Events; count > 0 {

p.eventSema = newSema(count)

}

maxEvents := p.queue.BufferConfig().Events

if maxEvents <= 0 {

// Maximum number of events until acker starts blocking.

// Only active if pipeline can drop events.

maxEvents = 64000

}

p.eventSema = newSema(maxEvents)

p.output = newOutputController(beat, monitors, p.observer, p.queue)

p.output.Set(out)

return p, nil

}

创建一个output的控制器outputController

func newOutputController(

beat beat.Info,

monitors Monitors,

observer outputObserver,

b queue.Queue,

) *outputController {

c := &outputController{

beat: beat,

monitors: monitors,

observer: observer,

queue: b,

}

ctx := &batchContext{}

c.consumer = newEventConsumer(monitors.Logger, b, ctx)

c.retryer = newRetryer(monitors.Logger, observer, nil, c.consumer)

ctx.observer = observer

ctx.retryer = c.retryer

c.consumer.sigContinue()

return c

}

同时初始化了eventConsumer和retryer。

func newEventConsumer(

log *logp.Logger,

queue queue.Queue,

ctx *batchContext,

) *eventConsumer {

c := &eventConsumer{

logger: log,

done: make(chan struct{}),

sig: make(chan consumerSignal, 3),

out: nil,

queue: queue,

consumer: queue.Consumer(),

ctx: ctx,

}

c.pause.Store(true)

go c.loop(c.consumer)

return c

}

在eventConsumer中启动了一个监听程序

func (c *eventConsumer) loop(consumer queue.Consumer) {

log := c.logger

log.Debug("start pipeline event consumer")

var (

out workQueue

batch *Batch

paused = true

)

handleSignal := func(sig consumerSignal) {

switch sig.tag {

case sigConsumerCheck:

case sigConsumerUpdateOutput:

c.out = sig.out

case sigConsumerUpdateInput:

consumer = sig.consumer

}

paused = c.paused()

if !paused && c.out != nil && batch != nil {

out = c.out.workQueue

} else {

out = nil

}

}

for {

if !paused && c.out != nil && consumer != nil && batch == nil {

out = c.out.workQueue

queueBatch, err := consumer.Get(c.out.batchSize)

if err != nil {

out = nil

consumer = nil

continue

}

if queueBatch != nil {

batch = newBatch(c.ctx, queueBatch, c.out.timeToLive)

}

paused = c.paused()

if paused || batch == nil {

out = nil

}

}

select {

case sig := <-c.sig:

handleSignal(sig)

continue

default:

}

select {

case <-c.done:

log.Debug("stop pipeline event consumer")

return

case sig := <-c.sig:

handleSignal(sig)

case out <- batch:

batch = nil

}

}

}

用于消费队列中的事件event,并将其构建成Batch,放到处理队列中。

func newBatch(ctx *batchContext, original queue.Batch, ttl int) *Batch {

if original == nil {

panic("empty batch")

}

b := batchPool.Get().(*Batch)

*b = Batch{

original: original,

ctx: ctx,

ttl: ttl,

events: original.Events(),

}

return b

}

再来看看retryer

func newRetryer(

log *logp.Logger,

observer outputObserver,

out workQueue,

c *eventConsumer,

) *retryer {

r := &retryer{

logger: log,

observer: observer,

done: make(chan struct{}),

sig: make(chan retryerSignal, 3),

in: retryQueue(make(chan batchEvent, 3)),

out: out,

consumer: c,

doneWaiter: sync.WaitGroup{},

}

r.doneWaiter.Add(1)

go r.loop()

return r

}

同样启动了监听程序,用于重试。

func (r *retryer) loop() {

defer r.doneWaiter.Done()

var (

out workQueue

consumerBlocked bool

active *Batch

activeSize int

buffer []*Batch

numOutputs int

log = r.logger

)

for {

select {

case <-r.done:

return

case evt := <-r.in:

var (

countFailed int

countDropped int

batch = evt.batch

countRetry = len(batch.events)

)

if evt.tag == retryBatch {

countFailed = len(batch.events)

r.observer.eventsFailed(countFailed)

decBatch(batch)

countRetry = len(batch.events)

countDropped = countFailed - countRetry

r.observer.eventsDropped(countDropped)

}

if len(batch.events) == 0 {

log.Info("Drop batch")

batch.Drop()

} else {

out = r.out

buffer = append(buffer, batch)

out = r.out

active = buffer[0]

activeSize = len(active.events)

if !consumerBlocked {

consumerBlocked = blockConsumer(numOutputs, len(buffer))

if consumerBlocked {

log.Info("retryer: send wait signal to consumer")

r.consumer.sigWait()

log.Info(" done")

}

}

}

case out <- active:

r.observer.eventsRetry(activeSize)

buffer = buffer[1:]

active, activeSize = nil, 0

if len(buffer) == 0 {

out = nil

} else {

active = buffer[0]

activeSize = len(active.events)

}

if consumerBlocked {

consumerBlocked = blockConsumer(numOutputs, len(buffer))

if !consumerBlocked {

log.Info("retryer: send unwait-signal to consumer")

r.consumer.sigUnWait()

log.Info(" done")

}

}

case sig := <-r.sig:

switch sig.tag {

case sigRetryerUpdateOutput:

r.out = sig.channel

case sigRetryerOutputAdded:

numOutputs++

case sigRetryerOutputRemoved:

numOutputs--

}

}

}

}

然后对out进行了设置

func (c *outputController) Set(outGrp outputs.Group) {

// create new outputGroup with shared work queue

clients := outGrp.Clients

queue := makeWorkQueue()

worker := make([]outputWorker, len(clients))

for i, client := range clients {

worker[i] = makeClientWorker(c.observer, queue, client)

}

grp := &outputGroup{

workQueue: queue,

outputs: worker,

timeToLive: outGrp.Retry + 1,

batchSize: outGrp.BatchSize,

}

// update consumer and retryer

c.consumer.sigPause()

if c.out != nil {

for range c.out.outputs {

c.retryer.sigOutputRemoved()

}

}

c.retryer.updOutput(queue)

for range clients {

c.retryer.sigOutputAdded()

}

c.consumer.updOutput(grp)

// close old group, so events are send to new workQueue via retryer

if c.out != nil {

for _, w := range c.out.outputs {

w.Close()

}

}

c.out = grp

// restart consumer (potentially blocked by retryer)

c.consumer.sigContinue()

c.observer.updateOutputGroup()

}

这边就是对上面创建的kafka的每个client创建一个监控程序makeClientWorker

func makeClientWorker(observer outputObserver, qu workQueue, client outputs.Client) outputWorker {

if nc, ok := client.(outputs.NetworkClient); ok {

c := &netClientWorker{observer: observer, qu: qu, client: nc}

go c.run()

return c

}

c := &clientWorker{observer: observer, qu: qu, client: client}

go c.run()

return c

}

然后就用于监控workQueue的数据,有数据就通过client的push发送到kafka,到这边pipeline的初始化也就结束了。

日志收集

Filebeat 不仅支持普通文本日志的作为输入源,还内置支持了 redis 的慢查询日志、stdin、tcp 和 udp 等作为输入源。

本文只分析下普通文本日志的处理方式,对于普通文本日志,可以按照以下配置方式,指定 log 的输入源信息。

filebeat.inputs:

- type: log

enabled: true

paths:

- /var/log/*.log

其中 Input 也可以指定多个, 每个 Input 下的 Log 也可以指定多个。

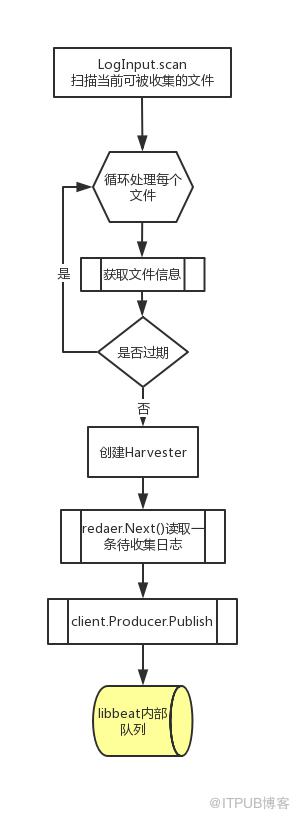

从收集日志、到发送事件到publisher,其数据流如下图所示:

filebeat 启动时会开启 Crawler,filebeat抽象出一个Crawler的结构体,对于配置中的每条 Input,Crawler 都会启动一个 Input 进行处理,代码如下所示:

func (c *Crawler) Start(...){

...

for _, inputConfig := range c.inputConfigs {

err := c.startInput(pipeline, inputConfig, r.GetStates())

if err != nil {

return err

}

}

...

}

然后就是创建input,比如我们采集的是log类型的,就是调用log的NewInput来创建,并且启动,定时进行扫描

p, err := input.New(config, connector, c.beatDone, states, nil)

会根据采集日志的类型来进行注册调用

// NewInput instantiates a new Log

func NewInput(

cfg *common.Config,

outlet channel.Connector,

context input.Context,

) (input.Input, error) {

cleanupNeeded := true

cleanupIfNeeded := func(f func() error) {

if cleanupNeeded {

f()

}

}

// Note: underlying output.

// The input and harvester do have different requirements

// on the timings the outlets must be closed/unblocked.

// The outlet generated here is the underlying outlet, only closed

// once all workers have been shut down.

// For state updates and events, separate sub-outlets will be used.

out, err := outlet.ConnectWith(cfg, beat.ClientConfig{

Processing: beat.ProcessingConfig{

DynamicFields: context.DynamicFields,

},

})

if err != nil {

return nil, err

}

defer cleanupIfNeeded(out.Close)

// stateOut will only be unblocked if the beat is shut down.

// otherwise it can block on a full publisher pipeline, so state updates

// can be forwarded correctly to the registrar.

stateOut := channel.CloseOnSignal(channel.SubOutlet(out), context.BeatDone)

defer cleanupIfNeeded(stateOut.Close)

meta := context.Meta

if len(meta) == 0 {

meta = nil

}

p := &Input{

config: defaultConfig,

cfg: cfg,

harvesters: harvester.NewRegistry(),

outlet: out,

stateOutlet: stateOut,

states: file.NewStates(),

done: context.Done,

meta: meta,

}

if err := cfg.Unpack(&p.config); err != nil {

return nil, err

}

if err := p.config.resolveRecursiveGlobs(); err != nil {

return nil, fmt.Errorf("Failed to resolve recursive globs in config: %v", err)

}

if err := p.config.normalizeGlobPatterns(); err != nil {

return nil, fmt.Errorf("Failed to normalize globs patterns: %v", err)

}

// Create empty harvester to check if configs are fine

// TODO: Do config validation instead

_, err = p.createHarvester(file.State{}, nil)

if err != nil {

return nil, err

}

if len(p.config.Paths) == 0 {

return nil, fmt.Errorf("each input must have at least one path defined")

}

err = p.loadStates(context.States)

if err != nil {

return nil, err

}

logp.Info("Configured paths: %v", p.config.Paths)

cleanupNeeded = false

go p.stopWhenDone()

return p, nil

}

最后会调用这个结构体的run函数进行扫描,主要是调用

p.scan()

// Scan starts a scanGlob for each provided path/glob

func (p *Input) scan() {

var sortInfos []FileSortInfo

var files []string

paths := p.getFiles()

var err error

if p.config.ScanSort != "" {

sortInfos, err = getSortedFiles(p.config.ScanOrder, p.config.ScanSort, getSortInfos(paths))

if err != nil {

logp.Err("Failed to sort files during scan due to error %s", err)

}

}

if sortInfos == nil {

files = getKeys(paths)

}

for i := 0; i < len(paths); i++ {

var path string

var info os.FileInfo

if sortInfos == nil {

path = files[i]

info = paths[path]

} else {

path = sortInfos[i].path

info = sortInfos[i].info

}

select {

case <-p.done:

logp.Info("Scan aborted because input stopped.")

return

default:

}

newState, err := getFileState(path, info, p)

if err != nil {

logp.Err("Skipping file %s due to error %s", path, err)

}

// Load last state

lastState := p.states.FindPrevious(newState)

// Ignores all files which fall under ignore_older

if p.isIgnoreOlder(newState) {

logp.Debug("input","ignore")

err := p.handleIgnoreOlder(lastState, newState)

if err != nil {

logp.Err("Updating ignore_older state error: %s", err)

}

//close(p.done)

continue

}

// Decides if previous state exists

if lastState.IsEmpty() {

logp.Debug("input", "Start harvester for new file: %s", newState.Source)

err := p.startHarvester(newState, 0)

if err == errHarvesterLimit {

logp.Debug("input", harvesterErrMsg, newState.Source, err)

continue

}

if err != nil {

logp.Err(harvesterErrMsg, newState.Source, err)

}

} else {

p.harvestExistingFile(newState, lastState)

}

}

}

进行扫描过滤。由于指定的 paths 可以配置多个,而且可以是 Glob 类型,因此 Filebeat 将会匹配到多个配置文件。

根据配置获取匹配的日志文件,需要注意的是,这里的匹配方式并非正则,而是采用linux glob的规则,和正则还是有一些区别。

matches, err := filepath.Glob(path)

获取到了所有匹配的日志文件之后,会经过一些复杂的过滤,例如如果配置了exclude_files则会忽略这类文件,同时还会查询文件的状态,如果文件的最近一次修改时间大于ignore_older的配置,也会不去采集该文件。

还会对文件进行处理,获取每个文件的状态,构建新的state结构,

newState, err := getFileState(path, info, p)

if err != nil {

logp.Err("Skipping file %s due to error %s", path, err)

}

func getFileState(path string, info os.FileInfo, p *Input) (file.State, error) {

var err error

var absolutePath string

absolutePath, err = filepath.Abs(path)

if err != nil {

return file.State{}, fmt.Errorf("could not fetch abs path for file %s: %s", absolutePath, err)

}

logp.Debug("input", "Check file for harvesting: %s", absolutePath)

// Create new state for comparison

newState := file.NewState(info, absolutePath, p.config.Type, p.meta, p.cfg.GetField("brokerlist"), p.cfg.GetField("topic"))

return newState, nil

}

同时在已经存在的状态中进行对比,如果获取到对于的状态就不重新启动协程进行采集,如果获取一个新的状态就开启新的协程进行采集。

// Load last state

lastState := p.states.FindPrevious(newState)

Input对象创建时会从registry读取文件状态(主要是offset), 对于每个匹配到的文件,都会开启一个 Harvester 进行逐行读取,每个 Harvester 都工作在自己的的 goroutine 中。

err := p.startHarvester(newState, 0)

if err == errHarvesterLimit {

logp.Debug("input", harvesterErrMsg, newState.Source, err)

continue

}

if err != nil {

logp.Err(harvesterErrMsg, newState.Source, err)

}

我们来看看startHarvester

// startHarvester starts a new harvester with the given offset

// In case the HarvesterLimit is reached, an error is returned

func (p *Input) startHarvester(state file.State, offset int64) error {

if p.numHarvesters.Inc() > p.config.HarvesterLimit && p.config.HarvesterLimit > 0 {

p.numHarvesters.Dec()

harvesterSkipped.Add(1)

return errHarvesterLimit

}

// Set state to "not" finished to indicate that a harvester is running

state.Finished = false

state.Offset = offset

// Create harvester with state

h, err := p.createHarvester(state, func() { p.numHarvesters.Dec() })

if err != nil {

p.numHarvesters.Dec()

return err

}

err = h.Setup()

if err != nil {

p.numHarvesters.Dec()

return fmt.Errorf("error setting up harvester: %s", err)

}

// Update state before staring harvester

// This makes sure the states is set to Finished: false

// This is synchronous state update as part of the scan

h.SendStateUpdate()

if err = p.harvesters.Start(h); err != nil {

p.numHarvesters.Dec()

}

return err

}

首先创建了Harvester

// createHarvester creates a new harvester instance from the given state

func (p *Input) createHarvester(state file.State, onTerminate func()) (*Harvester, error) {

// Each wraps the outlet, for closing the outlet individually

h, err := NewHarvester(

p.cfg,

state,

p.states,

func(state file.State) bool {

return p.stateOutlet.OnEvent(beat.Event{Private: state})

},

subOutletWrap(p.outlet),

)

if err == nil {

h.onTerminate = onTerminate

}

return h, err

}

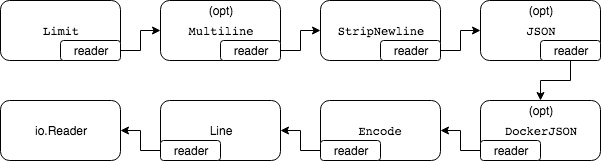

harvester启动时会通过Setup方法创建一系列reader形成读处理链

关于log类型的reader处理链,如下图所示:

opt表示根据配置决定是否创建该reader

Reader包括:

- Line: 包含os.File,用于从指定offset开始读取日志行。虽然位于处理链的最内部,但其Next函数中实际的处理逻辑(读文件行)却是最新被执行的。

- Encode: 包含Line Reader,将其读取到的行生成Message结构后返回

- JSON, DockerJSON: 将json形式的日志内容decode成字段

- StripNewLine:去除日志行尾部的空白符

- Multiline: 用于读取多行日志

- Limit: 限制单行日志字节数

除了Line Reader外,这些reader都实现了Reader接口:

type Reader interface {

Next() (Message, error)

}

Reader通过内部包含Reader对象的方式,使Reader形成一个处理链,其实这就是设计模式中的责任链模式。

各Reader的Next方法的通用形式像是这样:Next方法调用内部Reader对象的Next方法获取Message,然后处理后返回。

func (r *SomeReader) Next() (Message, error) {

message, err := r.reader.Next()

if err != nil {

return message, err

}

// do some processing...

return message, nil

}

其实Harvester 的工作流程非常简单,harvester从registry记录的文件位置开始读取,就是逐行读取文件,并更新该文件暂时在 Input 中的文件偏移量(注意,并不是 Registrar 中的偏移量),读取完成(读到文件的EOF末尾),组装成事件(beat.Event)后发给Publisher。主要是调用了Harvester的run方法,部分如下:

for {

select {

case <-h.done:

return nil

default:

}

message, err := h.reader.Next()

if err != nil {

switch err {

case ErrFileTruncate:

logp.Info("File was truncated. Begin reading file from offset 0: %s", h.state.Source)

h.state.Offset = 0

filesTruncated.Add(1)

case ErrRemoved:

logp.Info("File was removed: %s. Closing because close_removed is enabled.", h.state.Source)

case ErrRenamed:

logp.Info("File was renamed: %s. Closing because close_renamed is enabled.", h.state.Source)

case ErrClosed:

logp.Info("Reader was closed: %s. Closing.", h.state.Source)

case io.EOF:

logp.Info("End of file reached: %s. Closing because close_eof is enabled.", h.state.Source)

case ErrInactive:

logp.Info("File is inactive: %s. Closing because close_inactive of %v reached.", h.state.Source, h.config.CloseInactive)

case reader.ErrLineUnparsable:

logp.Info("Skipping unparsable line in file: %v", h.state.Source)

//line unparsable, go to next line

continue

default:

logp.Err("Read line error: %v; File: %v", err, h.state.Source)

}

return nil

}

// Get copy of state to work on

// This is important in case sending is not successful so on shutdown

// the old offset is reported

state := h.getState()

startingOffset := state.Offset

state.Offset += int64(message.Bytes)

// Stop harvester in case of an error

if !h.onMessage(forwarder, state, message, startingOffset) {

return nil

}

// Update state of harvester as successfully sent

h.state = state

}

可以看到,reader.Next()方法会不停的读取日志,如果没有返回异常,则发送日志数据到缓存队列中。

返回的异常有几种类型,除了读取到EOF外,还会有例如文件一段时间不活跃等情况发生会使harvester goroutine退出,不再采集该文件,并关闭文件句柄。 filebeat为了防止占据过多的采集日志文件的文件句柄,默认的close_inactive参数为5min,如果日志文件5min内没有被修改,上面代码会进入ErrInactive的case,之后该harvester goroutine会被关闭。 这种场景下还需要注意的是,如果某个文件日志采集中被移除了,但是由于此时被filebeat保持着文件句柄,文件占据的磁盘空间会被保留直到harvester goroutine结束。

不同的harvester goroutine采集到的日志数据都会发送至一个全局的队列queue中,queue的实现有两种:基于内存和基于磁盘的队列,目前基于磁盘的队列还是处于alpha阶段,filebeat默认启用的是基于内存的缓存队列。 每当队列中的数据缓存到一定的大小或者超过了定时的时间(默认1s),会被注册的client从队列中消费,发送至配置的后端。目前可以设置的client有kafka、elasticsearch、redis等。

同时,我们需要考虑到,日志型的数据其实是在不断增长和变化的:

会有新的日志在不断产生

可能一个日志文件对应的 Harvester 退出后,又再次有了内容更新。

为了解决这两个情况,filebeat 采用了 Input 定时扫描的方式。代码如下,可以看出,Input 扫描的频率是由用户指定的 scan_frequency 配置来决定的 (默认 10s 扫描一次)。

func (p *Runner) Run() {

p.input.Run()

if p.Once {

return

}

for {

select {

case <-p.done:

logp.Info("input ticker stopped")

return

case <-time.After(p.config.ScanFrequency): // 定时扫描

logp.Debug("input", "Run input")

p.input.Run()

}

}

}

此外,如果用户启动时指定了 –once 选项,则扫描只会进行一次,就退出了。

使用一个简单的流程图可以这样表示

处理文件重命名,删除,截断

- 获取文件信息时会获取文件的device id + indoe作为文件的唯一标识;

- 文件收集进度会被持久化,这样当创建Harvester时,首先会对文件作openFile, 以 device id + inode为key在持久化文件中查看当前文件是否被收集过,收集到了什么位置,然后断点续传

- 在读取过程中,如果文件被截断,认为文件已经被同名覆盖,将从头开始读取文件

- 如果文件被删除,因为原文件已被打开,不影响继续收集,但如果设置了CloseRemoved, 则不会再继续收集

- 如果文件被重命名,因为原文件已被打开,不影响继续收集,但如果设置了CloseRenamed , 则不会再继续收集

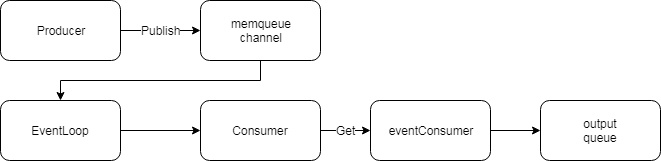

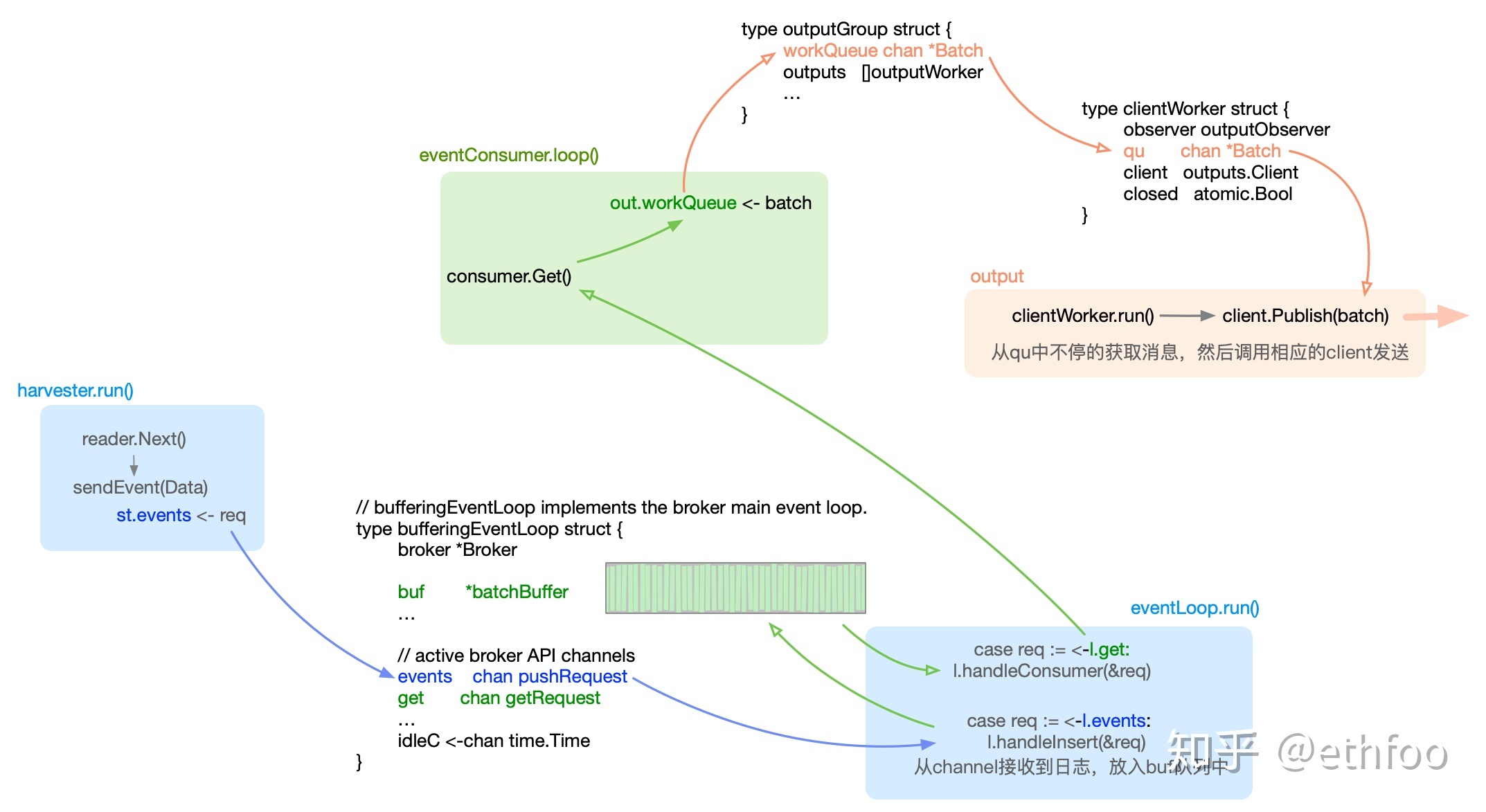

pipeline调度

至此,我们可以清楚的知道,Filebeat 是如何采集日志文件。而日志采集过程,Harvest 会将数据写到 Pipeline 中。我们接下来看下数据是如何写入到 Pipeline 中的。

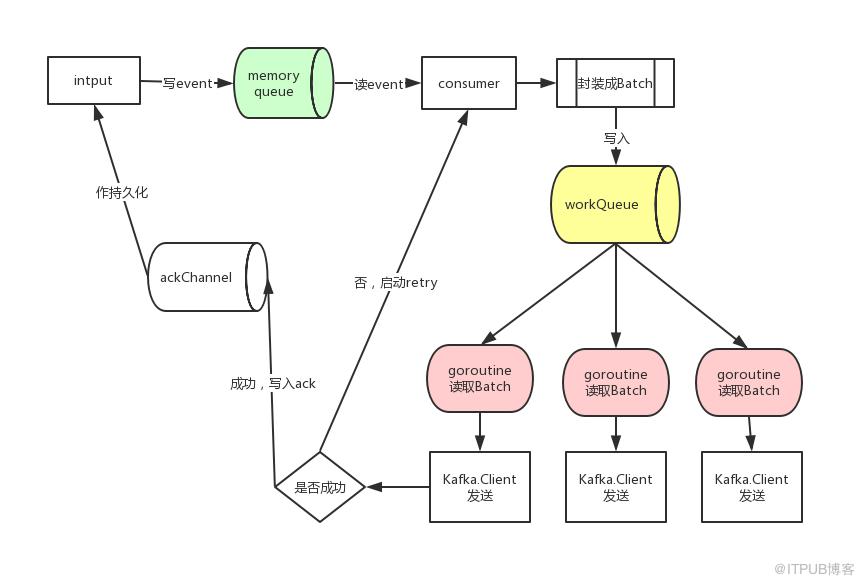

Haveseter 会将数据写入缓存中,而另一方面 Output 会从缓存将数据读走。整个生产消费的过程都是由 Pipeline 进行调度的,而整个调度过程也非常复杂。

不同的harvester goroutine采集到的日志数据都会发送至一个全局的队列queue中,Filebeat 的缓存queue目前分为 memqueue 和 spool。memqueue 顾名思义就是内存缓存,spool 则是将数据缓存到磁盘中。本文将基于 memqueue 讲解整个调度过程。

在下面的pipeline的写入和消费中,在client.go在(*client) publish方法中我们可以看到,事件是通过调用c.producer.Publish(pubEvent)被实际发送的,而producer则通过具体Queue的Producer方法生成。

队列对象被包含在pipeline.go:Pipeline结构中,其接口的定义如下:

type Queue interface {

io.Closer

BufferConfig() BufferConfig

Producer(cfg ProducerConfig) Producer

Consumer() Consumer

}

主要的,Producer方法生成Producer对象,用于向队列中push事件;Consumer方法生成Consumer对象,用于从队列中取出事件。Producer和Consumer接口定义如下:

type Producer interface {

Publish(event publisher.Event) bool

TryPublish(event publisher.Event) bool

Cancel() int

}

type Consumer interface {

Get(sz int) (Batch, error)

Close() error

}

在配置中没有指定队列配置时,默认使用了memqueue作为队列实现,下面我们来看看memqueue及其对应producer和consumer定义:

Broker结构(memqueue在代码中实际对应的结构名是Broker):

type Broker struct {

done chan struct{}

logger logger

bufSize int

// buf brokerBuffer

// minEvents int

// idleTimeout time.Duration

// api channels

events chan pushRequest

requests chan getRequest

pubCancel chan producerCancelRequest

// internal channels

acks chan int

scheduledACKs chan chanList

eventer queue.Eventer

// wait group for worker shutdown

wg sync.WaitGroup

waitOnClose bool

}

根据是否需要ack分为forgetfullProducer和ackProducer两种producer:

type forgetfullProducer struct {

broker *Broker

openState openState

}

type ackProducer struct {

broker *Broker

cancel bool

seq uint32

state produceState

openState openState

}

consumer结构:

type consumer struct {

broker *Broker

resp chan getResponse

done chan struct{}

closed atomic.Bool

}

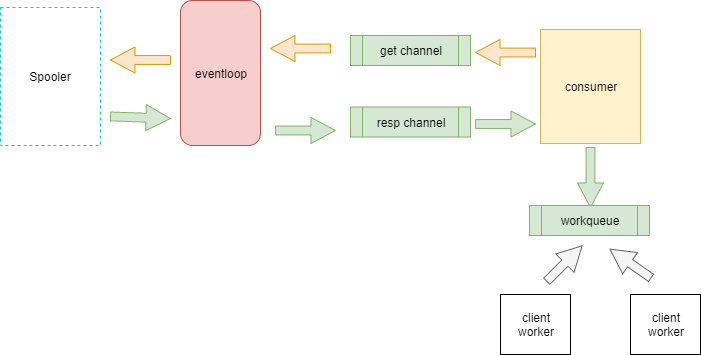

queue、producer、consumer三者关系的运作方式如下图所示:

- Producer通过Publish或TryPublish事件放入Broker的队列,即结构中的channel对象evetns

- Broker的主事件循环EventLoop将(请求)事件从events channel取出,放入自身结构体对象ringBuffer中。主事件循环有两种类型:

- 直接(不带buffer)事件循环结构directEventLoop:收到事件后尽可能快的转发;

- 带buffer事件循环结构bufferingEventLoop:当buffer满或刷新超时时转发。具体使用哪一种取决于memqueue配置项flush.min_events,大于1时使用后者,否则使用前者。

- eventConsumer调用Consumer的Get方法获取事件:

- 首先将获取事件请求(包括请求事件数和用于存放其响应事件的channel resp)放入Broker的请求队列requests中,等待主事件循环EventLoop处理后将事件放入resp;

- 获取resp的事件,组装成batch结构后返回

- eventConsumer将事件放入output对应队列中

这部分关于事件在队列中各种channel间的流转,笔者认为是比较消耗性能的,但不清楚设计者这样设计的考量是什么。

另外值得思考的是,在多个go routine使用队列交互的场景下,libbeat中都使用了go语言channel作为其底层的队列,它是否可以完全替代加锁队列的使用呢?

Pipeline 的写入

在Crawler收集日志并转换成事件后,我们继续发送数据,其就会通过调用Publisher对应client的Publish接口将事件送到Publisher,后续的处理流程也都将由libbeat完成,事件的流转如下图所示:

我们首先看一下事件处理器processor

在harvester调用client.Publish接口时,其内部会使用配置中定义的processors对事件进行处理,然后才将事件发送到Publisher队列。

processor包含两种:在Input内定义作为局部(Input独享)的processor,其只对该Input产生的事件生效;在顶层配置中定义作为全局processor,其对全部事件生效。其对应的代码实现方式是: filebeat在使用libbeat pipeline的ConnectWith接口创建client时(factory.go中(*OutletFactory)Create函数),会将Input内部的定义processor作为参数传递给ConnectWith接口。而在ConnectWith实现中,会将参数中的processor和全局processor(在创建pipeline时生成)合并。从这里读者也可以发现,实际上每个Input都独享一个client,其包含一些Input自身的配置定义逻辑。

任一Processor都实现了Processor接口:Run函数包含处理逻辑,String返回Processor名。

type Processor interface {

Run(event *beat.Event) (*beat.Event, error)

String() string

}

我们再看下 Haveseter 是如何将数据写入缓存中的,如下图所示:

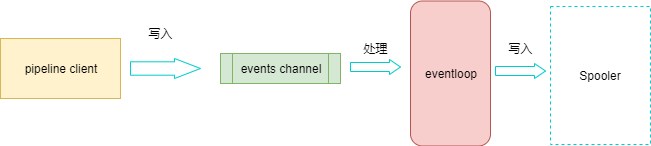

Harvester 通过 pipeline 提供的 pipelineClient 将数据写入到 pipeline 中,Haveseter 会将读到的数据会包装成一个 Event 结构体,再递交给 pipeline。

在 Filebeat 的实现中,pipelineClient 并不直接操作缓存,而是将 event 先写入一个 events channel 中。

同时,有一个 eventloop 组件,会监听 events channel 的事件到来,等 event 到达时,eventloop 会将其放入缓存中。

当缓存满的时候,eventloop 直接移除对该 channel 的监听。

每次 event ACK 或者取消后,缓存不再满了,则 eventloop 会重新监听 events channel。

// onMessage processes a new message read from the reader.

// This results in a state update and possibly an event would be send.

// A state update first updates the in memory state held by the prospector,

// and finally sends the file.State indirectly to the registrar.

// The events Private field is used to forward the file state update.

//

// onMessage returns 'false' if it was interrupted in the process of sending the event.

// This normally signals a harvester shutdown.

func (h *Harvester) onMessage(

forwarder *harvester.Forwarder,

state file.State,

message reader.Message,

messageOffset int64,

) bool {

if h.source.HasState() {

h.states.Update(state)

}

text := string(message.Content)

if message.IsEmpty() || !h.shouldExportLine(text) {

// No data or event is filtered out -> send empty event with state update

// only. The call can fail on filebeat shutdown.

// The event will be filtered out, but forwarded to the registry as is.

err := forwarder.Send(beat.Event{Private: state})

return err == nil

}

fields := common.MapStr{

"log": common.MapStr{

"offset": messageOffset, // Offset here is the offset before the starting char.

"file": common.MapStr{

"path": state.Source,

},

},

}

fields.DeepUpdate(message.Fields)

// Check if json fields exist

var jsonFields common.MapStr

if f, ok := fields["json"]; ok {

jsonFields = f.(common.MapStr)

}

var meta common.MapStr

timestamp := message.Ts

if h.config.JSON != nil && len(jsonFields) > 0 {

id, ts := readjson.MergeJSONFields(fields, jsonFields, &text, *h.config.JSON)

if !ts.IsZero() {

// there was a `@timestamp` key in the event, so overwrite

// the resulting timestamp

timestamp = ts

}

if id != "" {

meta = common.MapStr{

"id": id,

}

}

} else if &text != nil {

if fields == nil {

fields = common.MapStr{}

}

fields["message"] = text

}

err := forwarder.Send(beat.Event{

Timestamp: timestamp,

Fields: fields,

Meta: meta,

Private: state,

})

return err == nil

}

将数据包装成event直接通过send方法将数据发出去

// Send updates the input state and sends the event to the spooler

// All state updates done by the input itself are synchronous to make sure no states are overwritten

func (f *Forwarder) Send(event beat.Event) error {

ok := f.Outlet.OnEvent(event)

if !ok {

logp.Info("Input outlet closed")

return errors.New("input outlet closed")

}

return nil

}

调用Outlet.OnEvent发送data

点进去发现是一个接口

type Outlet interface {

OnEvent(data *util.Data) bool

}

经过调试观察,elastic\beats\filebeat\channel\outlet.go实现了这个接口

outlet在Harvester的run一开始就创建了

outlet := channel.CloseOnSignal(h.outletFactory(), h.done)

forwarder := harvester.NewForwarder(outlet)

所以调用的OnEvent

func (o *outlet) OnEvent(event beat.Event) bool {

if !o.isOpen.Load() {

return false

}

if o.wg != nil {

o.wg.Add(1)

}

o.client.Publish(event)

// Note: race condition on shutdown:

// The underlying beat.Client is asynchronous. Without proper ACK

// handler we can not tell if the event made it 'through' or the client

// close has been completed before sending. In either case,

// we report 'false' here, indicating the event eventually being dropped.

// Returning false here, prevents the harvester from updating the state

// to the most recently published events. Therefore, on shutdown the harvester

// might report an old/outdated state update to the registry, overwriting the

// most recently

// published offset in the registry on shutdown.

return o.isOpen.Load()

}

通过client.Publish发送数据,client也是一个接口

type Client interface {

Publish(Event)

PublishAll([]Event)

Close() error

}

调试之后,client使用的是elastic\beats\libbeat\publisher\pipeline\client.go的client对象

我们来看一下这个client是通过Harvester的参数outletFactory来初始化的,我们来看一下NewHarvester初始化的时候也就是在createHarvester的时候传递的参数

// createHarvester creates a new harvester instance from the given state

func (p *Input) createHarvester(state file.State, onTerminate func()) (*Harvester, error) {

// Each wraps the outlet, for closing the outlet individually

h, err := NewHarvester(

p.cfg,

state,

p.states,

func(state file.State) bool {

return p.stateOutlet.OnEvent(beat.Event{Private: state})

},

subOutletWrap(p.outlet),

)

if err == nil {

h.onTerminate = onTerminate

}

return h, err

}

就是subOutletWrap中的参数p.outlet那就要看以下Input初始化的的时候NewInput传递的参数

// NewInput instantiates a new Log

func NewInput(

cfg *common.Config,

outlet channel.Connector,

context input.Context,

) (input.Input, error) {

cleanupNeeded := true

cleanupIfNeeded := func(f func() error) {

if cleanupNeeded {

f()

}

}

// Note: underlying output.

// The input and harvester do have different requirements

// on the timings the outlets must be closed/unblocked.

// The outlet generated here is the underlying outlet, only closed

// once all workers have been shut down.

// For state updates and events, separate sub-outlets will be used.

out, err := outlet.ConnectWith(cfg, beat.ClientConfig{

Processing: beat.ProcessingConfig{

DynamicFields: context.DynamicFields,

},

})

if err != nil {

return nil, err

}

defer cleanupIfNeeded(out.Close)

// stateOut will only be unblocked if the beat is shut down.

// otherwise it can block on a full publisher pipeline, so state updates

// can be forwarded correctly to the registrar.

stateOut := channel.CloseOnSignal(channel.SubOutlet(out), context.BeatDone)

defer cleanupIfNeeded(stateOut.Close)

meta := context.Meta

if len(meta) == 0 {

meta = nil

}

p := &Input{

config: defaultConfig,

cfg: cfg,

harvesters: harvester.NewRegistry(),

outlet: out,

stateOutlet: stateOut,

states: file.NewStates(),

done: context.Done,

meta: meta,

}

if err := cfg.Unpack(&p.config); err != nil {

return nil, err

}

if err := p.config.resolveRecursiveGlobs(); err != nil {

return nil, fmt.Errorf("Failed to resolve recursive globs in config: %v", err)

}

if err := p.config.normalizeGlobPatterns(); err != nil {

return nil, fmt.Errorf("Failed to normalize globs patterns: %v", err)

}

// Create empty harvester to check if configs are fine

// TODO: Do config validation instead

_, err = p.createHarvester(file.State{}, nil)

if err != nil {

return nil, err

}

if len(p.config.Paths) == 0 {

return nil, fmt.Errorf("each input must have at least one path defined")

}

err = p.loadStates(context.States)

if err != nil {

return nil, err

}

logp.Info("Configured paths: %v", p.config.Paths)

cleanupNeeded = false

go p.stopWhenDone()

return p, nil

}

主要看outlet,这个是在Crawler的startInput的时候进行初始化

func (c *Crawler) startInput(

pipeline beat.Pipeline,

config *common.Config,

states []file.State,

) error {

if !config.Enabled() {

return nil

}

connector := c.out(pipeline)

p, err := input.New(config, connector, c.beatDone, states, nil)

if err != nil {

return fmt.Errorf("Error while initializing input: %s", err)

}

p.Once = c.once

if _, ok := c.inputs[p.ID]; ok {

return fmt.Errorf("Input with same ID already exists: %d", p.ID)

}

c.inputs[p.ID] = p

p.Start()

return nil

}

看out就是crawler创建new的时候传递的值

crawler, err := crawler.New(

channel.NewOutletFactory(outDone, wgEvents, b.Info).Create,

config.Inputs,

b.Info.Version,

fb.done,

*once)

就是create返回的pipelineConnector结构体

func (f *OutletFactory) Create(p beat.Pipeline) Connector {

return &pipelineConnector{parent: f, pipeline: p}

}

看pipelineConnector的ConnectWith函数

func (c *pipelineConnector) ConnectWith(cfg *common.Config, clientCfg beat.ClientConfig) (Outleter, error) {

config := inputOutletConfig{}

if err := cfg.Unpack(&config); err != nil {

return nil, err

}

procs, err := processorsForConfig(c.parent.beatInfo, config, clientCfg)

if err != nil {

return nil, err

}

setOptional := func(to common.MapStr, key string, value string) {

if value != "" {

to.Put(key, value)

}

}

meta := clientCfg.Processing.Meta.Clone()

fields := clientCfg.Processing.Fields.Clone()

serviceType := config.ServiceType

if serviceType == "" {

serviceType = config.Module

}

setOptional(meta, "pipeline", config.Pipeline)

setOptional(fields, "fileset.name", config.Fileset)

setOptional(fields, "service.type", serviceType)

setOptional(fields, "input.type", config.Type)

if config.Module != "" {

event := common.MapStr{"module": config.Module}

if config.Fileset != "" {

event["dataset"] = config.Module + "." + config.Fileset

}

fields["event"] = event

}

mode := clientCfg.PublishMode

if mode == beat.DefaultGuarantees {

mode = beat.GuaranteedSend

}

// connect with updated configuration

clientCfg.PublishMode = mode

clientCfg.Processing.EventMetadata = config.EventMetadata

clientCfg.Processing.Meta = meta

clientCfg.Processing.Fields = fields

clientCfg.Processing.Processor = procs

clientCfg.Processing.KeepNull = config.KeepNull

client, err := c.pipeline.ConnectWith(clientCfg)

if err != nil {

return nil, err

}

outlet := newOutlet(client, c.parent.wgEvents)

if c.parent.done != nil {

return CloseOnSignal(outlet, c.parent.done), nil

}

return outlet, nil

}

这边获取到了pipeline的客户端client

// ConnectWith create a new Client for publishing events to the pipeline.

// The client behavior on close and ACK handling can be configured by setting

// the appropriate fields in the passed ClientConfig.

// If not set otherwise the defaut publish mode is OutputChooses.

func (p *Pipeline) ConnectWith(cfg beat.ClientConfig) (beat.Client, error) {

var (

canDrop bool

dropOnCancel bool

eventFlags publisher.EventFlags

)

err := validateClientConfig(&cfg)

if err != nil {

return nil, err

}

p.eventer.mutex.Lock()

p.eventer.modifyable = false

p.eventer.mutex.Unlock()

switch cfg.PublishMode {

case beat.GuaranteedSend:

eventFlags = publisher.GuaranteedSend

dropOnCancel = true

case beat.DropIfFull:

canDrop = true

}

waitClose := cfg.WaitClose

reportEvents := p.waitCloser != nil

switch p.waitCloseMode {

case NoWaitOnClose:

case WaitOnClientClose:

if waitClose <= 0 {

waitClose = p.waitCloseTimeout

}

}

processors, err := p.createEventProcessing(cfg.Processing, publishDisabled)

if err != nil {

return nil, err

}

client := &client{

pipeline: p,

closeRef: cfg.CloseRef,

done: make(chan struct{}),

isOpen: atomic.MakeBool(true),

eventer: cfg.Events,

processors: processors,

eventFlags: eventFlags,

canDrop: canDrop,

reportEvents: reportEvents,

}

acker := p.makeACKer(processors != nil, &cfg, waitClose, client.unlink)

producerCfg := queue.ProducerConfig{

// Cancel events from queue if acker is configured

// and no pipeline-wide ACK handler is registered.

DropOnCancel: dropOnCancel && acker != nil && p.eventer.cb == nil,

}

if reportEvents || cfg.Events != nil {

producerCfg.OnDrop = func(event beat.Event) {

if cfg.Events != nil {

cfg.Events.DroppedOnPublish(event)

}

if reportEvents {

p.waitCloser.dec(1)

}

}

}

if acker != nil {

producerCfg.ACK = acker.ackEvents

} else {

acker = newCloseACKer(nilACKer, client.unlink)

}

client.acker = acker

client.producer = p.queue.Producer(producerCfg)

p.observer.clientConnected()

if client.closeRef != nil {

p.registerSignalPropagation(client)

}

return client, nil

}

调用的就是client的Publish函数来发送数据,publish方法即发送日志的方法,如果需要在发送前改造日志格式,可在这里添加代码,如下面的解析日志代码。

func (c *client) Publish(e beat.Event) {

c.mutex.Lock()

defer c.mutex.Unlock()

c.publish(e)

}

func (c *client) publish(e beat.Event) {

var (

event = &e

publish = true

log = c.pipeline.monitors.Logger

)

c.onNewEvent()

if !c.isOpen.Load() {

// client is closing down -> report event as dropped and return

c.onDroppedOnPublish(e)

return

}

if c.processors != nil {

var err error

event, err = c.processors.Run(event)

publish = event != nil

if err != nil {

// TODO: introduce dead-letter queue?

log.Errorf("Failed to publish event: %v", err)

}

}

if event != nil {

e = *event

}

open := c.acker.addEvent(e, publish)

if !open {

// client is closing down -> report event as dropped and return

c.onDroppedOnPublish(e)

return

}

if !publish {

c.onFilteredOut(e)

return

}

e = *event

pubEvent := publisher.Event{

Content: e,

Flags: c.eventFlags,

}

if c.reportEvents {

c.pipeline.waitCloser.inc()

}

var published bool

if c.canDrop {

published = c.producer.TryPublish(pubEvent)

} else {

published = c.producer.Publish(pubEvent)

}

if published {

c.onPublished()

} else {

c.onDroppedOnPublish(e)

if c.reportEvents {

c.pipeline.waitCloser.dec(1)

}

}

}

在上面创建clinet的时候,创建了队列的生产者,也就是之前broker的Producer

func (b *Broker) Producer(cfg queue.ProducerConfig) queue.Producer {

return newProducer(b, cfg.ACK, cfg.OnDrop, cfg.DropOnCancel)

}

func newProducer(b *Broker, cb ackHandler, dropCB func(beat.Event), dropOnCancel bool) queue.Producer {

openState := openState{

log: b.logger,

isOpen: atomic.MakeBool(true),

done: make(chan struct{}),

events: b.events,

}

if cb != nil {

p := &ackProducer{broker: b, seq: 1, cancel: dropOnCancel, openState: openState}

p.state.cb = cb

p.state.dropCB = dropCB

return p

}

return &forgetfulProducer{broker: b, openState: openState}

}

也就是forgetfulProducer结构体,调用这个的Publish函数来发送数据

func (p *forgetfulProducer) Publish(event publisher.Event) bool {

return p.openState.publish(p.makeRequest(event))

}

func (st *openState) publish(req pushRequest) bool {

select {

case st.events <- req:

return true

case <-st.done:

st.events = nil

return false

}

}

将数据放到了forgetfulProducer的openState的events中。到此数据就算发送到pipeline中了。

上文在pipeline的初始化的时候,queue初始化一般默认都是BufferingEventLoop,即带缓冲的队列。BufferingEventLoop是一个实现了Broker、带有各种channel的结构,主要用于将日志发送至consumer消费。 BufferingEventLoop的run方法中,同样是一个无限循环,这里可以认为是一个日志事件的调度中心。

for {

select {

case <-broker.done:

return

case req := <-l.events: // producer pushing new event

l.handleInsert(&req)

case req := <-l.get: // consumer asking for next batch

l.handleConsumer(&req)

case count := <-l.acks:

l.handleACK(count)

case <-l.idleC:

l.idleC = nil

l.timer.Stop()

if l.buf.length() > 0 {

l.flushBuffer()

}

}

}

上文中harvester goroutine每次读取到日志数据之后,最终会被发送至bufferingEventLoop中的events chan pushRequest 的channel中,然后触发上面req := <-l.events的case,handleInsert方法会把数据添加至bufferingEventLoop的buf中,buf即memqueue实际缓存日志数据的队列,如果buf长度超过配置的最大值或者bufferingEventLoop中的timer定时器(默认1S)触发了case <-l.idleC,均会调用flushBuffer()方法。 flushBuffer()又会触发req := <-l.get的case,然后运行handleConsumer方法,该方法中最重要的是这一句代码:

req.resp <- getResponse{ackChan, events}

这里获取到了consumer消费者的response channel,然后发送数据给这个channel。真正到这,才会触发consumer对memqueue的消费。所以,其实memqueue并非一直不停的在被consumer消费,而是在memqueue通知consumer的时候才被消费,我们可以理解为一种脉冲式的发送

简单的来说就是,每当队列中的数据缓存到一定的大小或者超过了定时的时间(默认1s),会被注册的client从队列中消费,发送至配置的后端。

以上是 Pipeline 的写入过程,此时 event 已被写入到了缓存中。

但是 Output 是如何从缓存中拿到 event 数据的?